By Thibault Jamet (Technical Product Owner) and Miguel Bernabeu (Site Reliability Engineer)

At Adevinta, we operate an Internal Developer Platform, The Cloud Platform, where Software Engineers across our marketplaces can deploy and manage their microservices. These microservices run on top of SCHIP, an internal platform built on Kubernetes.

One of our value propositions is that security fixes and cluster optimisations are applied once, for the benefit of all our tenants. This approach helps us make the most of our resources by ensuring teams do not duplicate work to solve the same problems.

One way to reduce compute costs on AWS today is to use Graviton instances, which are based on the ARM CPU architecture. In this article, we explain how, in SCHIP, we provide a best-in-class experience for running ARM workloads alongside the x86 architecture to more than 4,000 developers, so that they can reduce their infrastructure costs as transparently and gradually as possible.

Note: For the sake of simplicity, we will use the generic term “ARM” to designate the family of processors using the ARM architecture, whether 32 or 64 bits, including specific implementations like AWS Graviton instances. Similarly, we will use “x86” to designate the family of processors using the Intel x86 architecture, whether 32 or 64 bits, including specific implementations like Intel or AMD processors.

Tackling ARM and x86 Challenges in Kubernetes

The ARM architecture differs from x86. Every executable application must be built explicitly for the desired CPU architecture, either x86 (the most common offering) or ARM.

If a container built for one CPU architecture is scheduled onto a node with another, the workload fails as soon as execution starts, with an error such as “exec /opa: exec format error”.

In Kubernetes, the standard way to ensure the application runs on the relevant CPU architecture is to use a node selector or to configure node affinities. The Kubernetes scheduler then considers the kubernetes.io/arch node label populated by the kubelet.
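To make the mechanism concrete, here is a minimal Go sketch of how a node selector is matched against node labels. It is a simplified model for illustration, not the Kubernetes scheduler's code; the function name `matchesNodeSelector` is our own, while `kubernetes.io/arch` and its values `amd64` and `arm64` are the labels set by the kubelet.

```go
package main

import "fmt"

// archLabel is the well-known node label holding the node's CPU architecture.
const archLabel = "kubernetes.io/arch"

// matchesNodeSelector reports whether a node's labels satisfy every key/value
// pair of a Pod's nodeSelector. This is a simplified, hypothetical model of
// the check the scheduler performs.
func matchesNodeSelector(nodeLabels, nodeSelector map[string]string) bool {
	for key, want := range nodeSelector {
		if got, ok := nodeLabels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

func main() {
	armNode := map[string]string{archLabel: "arm64"}
	x86Node := map[string]string{archLabel: "amd64"}

	// A Pod that pins itself to ARM nodes.
	armOnly := map[string]string{archLabel: "arm64"}
	// A Pod without any node selector.
	unconstrained := map[string]string{}

	fmt.Println(matchesNodeSelector(armNode, armOnly))       // true
	fmt.Println(matchesNodeSelector(x86Node, armOnly))       // false
	fmt.Println(matchesNodeSelector(armNode, unconstrained)) // true
}
```

The last case is the important one: a Pod without a selector matches any node, so an x86-only image could land on an ARM node as soon as such nodes exist in the cluster.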

On our existing platform, most workloads run without node selectors or affinities. At our current scale, this means changing the deployment manifests of 5,000 applications before being able to provide ARM nodes to our users. This turns a simple cost optimisation project into a multi-month, cross-team project to synchronise the availability of the new node architecture.

With SCHIP, we have users with different backgrounds, from pure Software Developers to System Engineers, who deploy their applications very differently. While some of our users have control of the deployed manifests, others do not, as they use abstractions on top of Kubernetes or even Kubernetes operators that may not allow using node selectors.

Effortless ARM Integration

With all of this in mind, our goal with this project was to deliver, in the safest manner possible, nodes with the ARM architecture alongside the x86 architecture for all of our users, regardless of their knowledge or ability to configure the node selectors for their applications.

We also wanted to minimise the number of dependencies and cross-team synchronisation points of this project to minimise the time to market for this feature.

Streamlining ARM Adoption

In our situation, we identified two actions users would need to take to run on ARM CPUs: publish their container images for ARM and configure the Kubernetes node selector to use ARM nodes.

In our Cloud Platform, we provide the container runtime but have no control over how container images are built. In the long term, we want to ensure that images are built only for architectures that we can use and are willing to support. However, asking users to provide both an ARM image and a node selector is redundant. Instead, we looked for a way to remove the need for our developers to inject the node selector manually.

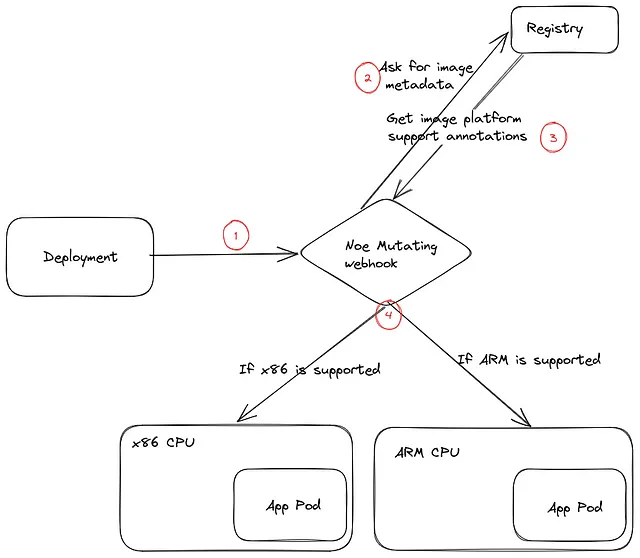

Our solution was to create a mutating webhook that adjusts node affinities based on the container images’ supported architectures.

When a new Pod is created in the Cluster, the webhook is called to review and adjust the Pod spec as needed. It takes all the images of the Pod and queries their registries for the metadata describing the CPU architectures each image supports. It then adds node affinities to the Pod according to those architectures, ensuring the Pod is only scheduled on nodes capable of executing all of its images.

This approach covers our end goal: clusters with mixed architectures, running applications that support only a subset of them. It also has to account for Pods with multiple images, all of which must support a common architecture.
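The core of this logic can be sketched in a few lines of Go. This is an illustration under our own assumptions, not the webhook's actual code: the image names, `commonArchitectures` and `archRequirement` are hypothetical, and the struct only mirrors the JSON shape of a Kubernetes node selector requirement.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// nodeSelectorRequirement mirrors the JSON shape of one matchExpressions entry
// in a Kubernetes node affinity term.
type nodeSelectorRequirement struct {
	Key      string   `json:"key"`
	Operator string   `json:"operator"`
	Values   []string `json:"values"`
}

// commonArchitectures returns, sorted, the architectures supported by every image.
func commonArchitectures(perImage map[string][]string) []string {
	counts := map[string]int{}
	for _, archs := range perImage {
		seen := map[string]bool{} // guards against duplicates within one image
		for _, arch := range archs {
			if !seen[arch] {
				seen[arch] = true
				counts[arch]++
			}
		}
	}
	var common []string
	for arch, n := range counts {
		if n == len(perImage) {
			common = append(common, arch)
		}
	}
	sort.Strings(common)
	return common
}

// archRequirement restricts scheduling to nodes whose architecture label is in archs.
func archRequirement(archs []string) nodeSelectorRequirement {
	return nodeSelectorRequirement{Key: "kubernetes.io/arch", Operator: "In", Values: archs}
}

func main() {
	// Hypothetical Pod with two containers; the sidecar is x86-only.
	images := map[string][]string{
		"example.com/app:1.0":     {"amd64", "arm64"},
		"example.com/sidecar:2.3": {"amd64"},
	}
	out, err := json.Marshal(archRequirement(commonArchitectures(images)))
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out)) // {"key":"kubernetes.io/arch","operator":"In","values":["amd64"]}
}
```

If the intersection is empty, no node can run the Pod, and a real webhook would have to decide whether to reject the Pod or leave it untouched.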

Insights and lessons learned

Optimising multi-arch builds

The need for multi-architecture support in our Kubernetes deployments became evident when using DaemonSets, which ensure that a specific set of pods runs on all nodes within the Cluster. To accommodate different CPU architectures, we must create container images supporting both ARM and x86.

Our CI runs on x86, so we must find an efficient way to build multi-architecture images. We discovered that using Go cross-compilation is significantly more efficient than relying on Docker’s target platform emulation.

Consider the following example. The traditional Dockerfile, which relies on Docker’s target platform emulation, is less efficient:

Dockerfile

FROM golang as build

# copy code

RUN go build -o /my-app /src

FROM scratch

COPY --from=build /my-app /my-app

Instead, using Go cross-compilation in conjunction with the Docker platform flag, we can optimise the build process:

FROM --platform=$BUILDPLATFORM golang AS build

# TARGETOS and TARGETARCH are provided by BuildKit for each requested platform

ARG TARGETOS

ARG TARGETARCH

# copy code

RUN GOOS=$TARGETOS GOARCH=$TARGETARCH go build -o /my-app /src

FROM scratch

COPY --from=build /my-app /my-app

By adopting this approach, we can significantly improve the efficiency of our build process while ensuring that our container images are compatible with both ARM and x86 architectures, ultimately facilitating seamless mixed-architecture deployments.
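To see Go cross-compilation at work outside of Docker, consider this small program, which reports the platform it was compiled for. It is only an illustration; the function name `buildTarget` is ours.

```go
package main

import (
	"fmt"
	"runtime"
)

// buildTarget returns the OS/architecture pair this binary was compiled for.
// runtime.GOOS and runtime.GOARCH are constants fixed at compile time.
func buildTarget() string {
	return runtime.GOOS + "/" + runtime.GOARCH
}

func main() {
	fmt.Println("compiled for", buildTarget())
}
```

Building it with `GOOS=linux GOARCH=arm64 go build` on an x86 machine produces an ARM binary directly, without any emulation. The compiler runs natively and only the output targets another architecture, which is where the efficiency gain comes from.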

Mutating webhook latency matters

When implementing the webhook, we quickly realised how important mutation latency would be in the solution. When mutations are too slow, we affect the capability of the Cluster to perform horizontal pod autoscaling and to adapt to the current computing load.

We considered this point in the early stages of conception. We realised that downloading the whole image for each pod creation would play against our low latency objectives and affect our scaling and operational efficiency. We decided to restrict our reads to the image metadata only without downloading all image layers.

This reduces the overhead and speeds up supported architecture determination. If the image does not support multi-arch, we default to the x86 architecture to maintain compatibility.
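As an illustration of how little data is needed, the following Go sketch extracts the supported architectures from an image manifest that has already been fetched. It assumes the registry returned either an OCI image index (a multi-arch manifest list) or a single-image manifest. The names `supportedArchitectures` and `defaultArch` are hypothetical, and fetching the manifest from the registry is deliberately left out.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// defaultArch is assumed when the image is not multi-arch.
const defaultArch = "amd64"

// imageIndex models only the fields of an OCI image index that we need.
type imageIndex struct {
	Manifests []struct {
		Platform *struct {
			Architecture string `json:"architecture"`
			OS           string `json:"os"`
		} `json:"platform"`
	} `json:"manifests"`
}

// supportedArchitectures lists the Linux architectures declared in a manifest.
// A single-image manifest has no "manifests" entries, so we fall back to defaultArch.
func supportedArchitectures(manifest []byte) ([]string, error) {
	var idx imageIndex
	if err := json.Unmarshal(manifest, &idx); err != nil {
		return nil, err
	}
	var archs []string
	seen := map[string]bool{}
	for _, m := range idx.Manifests {
		p := m.Platform
		if p == nil || p.OS != "linux" || p.Architecture == "" || seen[p.Architecture] {
			continue // skip non-Linux, incomplete or duplicate entries
		}
		seen[p.Architecture] = true
		archs = append(archs, p.Architecture)
	}
	if len(archs) == 0 {
		return []string{defaultArch}, nil
	}
	return archs, nil
}

func main() {
	multi := []byte(`{"schemaVersion":2,"manifests":[
		{"platform":{"architecture":"amd64","os":"linux"}},
		{"platform":{"architecture":"arm64","os":"linux"}}]}`)
	single := []byte(`{"schemaVersion":2,"config":{},"layers":[]}`)

	fmt.Println(supportedArchitectures(multi))  // [amd64 arm64] <nil>
	fmt.Println(supportedArchitectures(single)) // [amd64] <nil>
}
```

Manifests are small JSON documents, so reading them is far cheaper than pulling image layers.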

CPU architecture matters for application performance

We discovered that a fully transparent ARM migration is not feasible due to its complexity and potential operational risks. For instance, in our experience, ARM is less efficient in performing particular tasks, such as cryptographic operations. Therefore, we decided to favour a single CPU architecture. If the image supports both x86 and ARM, we will always default to x86 to ensure consistency of behaviour across all pods of a given deployment version.

Switching architecture needs developer actions

The issue of adoption remains. A CPU architecture change can range from trivial for some applications to highly critical for others, depending on the use cases. Software experts should decide on the CPU architecture, expressed through the platforms supported by the image.

Our users require time to test and tune their workloads before releasing such a change to Production with the best cost-performance ratio. We select x86 unless overridden at the Pod level, allowing them to control which applications to test and deploy in each architecture. We can undo this default once applications have decided which CPU architecture serves them best or if it is not relevant.
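The selection rule can be sketched as follows. This is a minimal illustration, not the actual implementation: the `preferred` parameter stands for whatever Pod-level override mechanism is used, and falling back to the first supported architecture for images without x86 support is our own assumption.

```go
package main

import (
	"errors"
	"fmt"
)

// defaultArch is the architecture favoured when an image supports several.
const defaultArch = "amd64"

// selectArchitecture picks a single architecture for all pods of a deployment.
// preferred is a hypothetical Pod-level override; an empty string means "not set".
func selectArchitecture(supported []string, preferred string) (string, error) {
	has := func(arch string) bool {
		for _, s := range supported {
			if s == arch {
				return true
			}
		}
		return false
	}
	switch {
	case preferred != "" && has(preferred):
		return preferred, nil // explicit choice made by the application owner
	case has(defaultArch):
		return defaultArch, nil // consistent default across all pods
	case len(supported) > 0:
		return supported[0], nil // for example, an ARM-only image
	default:
		return "", errors.New("no supported architecture")
	}
}

func main() {
	both := []string{"amd64", "arm64"}
	fmt.Println(selectArchitecture(both, ""))                // amd64 <nil>
	fmt.Println(selectArchitecture(both, "arm64"))           // arm64 <nil>
	fmt.Println(selectArchitecture([]string{"arm64"}, ""))   // arm64 <nil>
}
```

Because the choice is deterministic, every pod of a given deployment version ends up on the same architecture.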

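For a Go application, for example, the Dockerfile change described earlier comes down to the following diff:

$ git diff

diff --git a/Dockerfile b/Dockerfile

index e4ff736..de150e9 100644

--- a/Dockerfile

+++ b/Dockerfile

@@ -1,5 +1,8 @@

-FROM golang as build

+FROM --platform=$BUILDPLATFORM golang AS build

+# TARGETOS and TARGETARCH are provided by BuildKit for each requested platform

+ARG TARGETOS

+ARG TARGETARCH

 # copy code

-RUN go build -o /my-app /src

+RUN GOOS=$TARGETOS GOARCH=$TARGETARCH go build -o /my-app /src

 FROM scratch

 COPY --from=build /my-app /my-app

Releasing Noe, our transparent architecture selector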

We understand the value of collaboration and the power of the open-source community.

We are excited to announce that we are open-sourcing Noe, the key component that enables the progressive and transparent switch between ARM and x86 architectures within our Kubernetes clusters. By sharing this solution with the broader community, we hope to encourage further innovation and help other organisations overcome the challenges of mixed-architecture deployments.

Contributing our component to the open-source ecosystem invites developers and organisations to collaborate, improve and build upon our solution. We believe that together, we can create more efficient and adaptable Kubernetes environments that cater to the diverse needs of users across various industries.

So, join us in our mission to simplify mixed-architecture deployments and harness the full potential of ARM and x86 in Kubernetes.